Microsoft Azure Storage: Understand and Implement cloud storage solutions in real-world scenarios

Are you new to the cloud? Would you like a summary of some of the storage solutions offered by Microsoft Azure? Well, you’ve come to the right place. Join me on my journey through this blog post as I learn what Azure Storage, Azure Blobs and Azure Files have to offer us as cloud professionals.

This blog post will have two parts: The first part will be a walkthrough of the key components, features and possible scenarios of using Azure storage, Azure Files and Azure blobs. The main goal is to introduce us to the main Azure storage solutions and provide a solid base from which we can move to the second part.

The second part of this blog post will implement these three Azure cloud storage solutions in a real-world scenario. We will look in detail at the considerations a Cloud Administrator weighs when configuring Azure storage services to meet organization, system and user needs. We will also implement a secure design by looking at the various ways we can protect our storage resources, and the data stored within them, from unwanted access or data loss by restricting access and configuring replication. A key focus will be to ensure that the three tenets of the CIA (Confidentiality, Integrity, Availability) triad are accounted for when securing our data and the systems that handle it.

Part One: Azure Storage, Azure Files and Azure Blobs

Azure Storage is Microsoft’s cloud storage solution for modern data storage scenarios. It offers a massively scalable object store for data objects such as files, messages and tables. Azure Storage is used by IaaS and PaaS cloud services, and it can also store working data for websites, mobile apps and other applications.

Azure Storage supports three types of data:

- Structured data — Relational data with a shared schema, typically stored in tables with rows, columns and keys. Examples include Azure Table Storage (an autoscaling NoSQL store), Azure Cosmos DB and Azure SQL Database.

- Unstructured data — Nonrelational data with no clear relationships e.g. data stored by Azure Blob Storage (Highly-scalable, REST-based cloud object store) and Azure Data Lake (Hadoop Distributed File System (HDFS) as a service).

- Virtual Machine data — VM data storage includes disks (persistent block storage for Azure IaaS VMs) and files (fully managed file shares in the cloud), e.g. Azure Managed Disks that store database files, static website content or custom application code.

Azure storage supports two account types:

- Standard — Hard disk drives (HDD). For tables, blobs, queues and files. Low cost per GB. Best for bulk storage or infrequently accessed data.

- Premium — Solid-state drives (SSD). Only for blobs and files. Best for low-latency performance e.g. I/O intensive apps like databases. The premium account types can be further subdivided as follows:

- Premium block blobs: For block blobs and append blobs. Recommended for applications with high transaction rates or that need consistently low storage latency, i.e. high I/O throughput and low latency.

- Premium file shares: For file shares only. Recommended for enterprise or high-performance scale applications requiring SMB and NFS file shares support.

- Premium page blobs: For page blobs only which are ideal for storing index-based and sparse data structures, such as operating systems, data disks for virtual machines, and databases.

Azure Storage services

Azure provides the following storage solutions for use within the Azure Storage service:

- Azure Blob storage: A massively scalable object store for text and binary data e.g. images, videos, audio. It is also used for backup and restore, disaster recovery and archiving. Blob storage can also be accessed via the NFS (Network File System) protocol, TCP and UDP ports 111 and 2049.

- Azure Files: Managed file shares for cloud or on-premises deployments. Shares can be accessed by using the Server Message Block (SMB) protocol over TCP port 445 or the Network File System (NFS) protocol. Multiple virtual machines can share the same files with both read and write access. You can also read the files by using the REST interface or the storage client libraries. Azure Files is well suited to storing configuration files that multiple VMs need to access and to migrating on-premises apps that use file shares to the cloud.

- Azure Queue Storage: A messaging store for reliable messaging between application components. Queue messages can be up to 64 KB in size, and a queue can contain millions of messages. Queues are used to store lists of messages to be processed asynchronously.

- Azure Table storage: A service that stores nonrelational structured data (also known as structured NoSQL data) in the cloud, providing a key/attribute store with a schemaless design, which makes it easy to adapt your data as the needs of your application evolve. It is typically lower cost than traditional SQL offerings for the same volume of data.

NOTE: All storage account types are encrypted by using Storage Service Encryption (SSE) for data at rest.

Features of Azure Storage

Azure storage provides the following features and benefits to all of its solutions and users:

- Durability and high availability via redundancy.

- Secure access via encryption.

- Scalability.

- Managed: Microsoft handles hardware maintenance, updates and critical issues.

- Global data accessibility via HTTP or HTTPS, the Azure SDKs, Azure PowerShell, the Azure CLI and the Azure portal.

Replication strategies

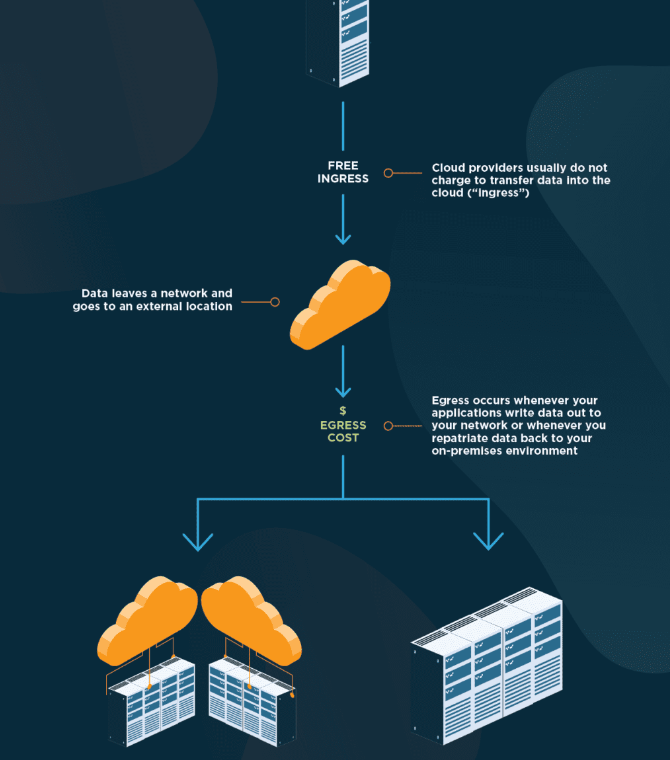

Replication is the process of keeping copies of your data in more than one location so that it survives hardware failures, outages and disasters. Azure Storage always stores multiple copies of your data, and the replication option you choose determines where those copies live: within a single datacenter, across availability zones, or in a secondary region. Replication is a key component of disaster recovery and business continuity for every organization. Azure provides the following replication options for the Azure Storage service:

- Locally redundant storage (LRS): Data is replicated three times within a single datacenter. It offers the lowest cost and the least durability, because a catastrophic event affecting that datacenter can cause data loss. It is most suitable when your data can be easily reconstructed if it is lost, when your data changes constantly and keeping it isn't essential, or when governance requirements restrict where your data can be stored.

- Zone-redundant storage (ZRS): Synchronously replicates your data across three availability zones in a single region. ZRS isn’t currently available in all regions. Changing to ZRS from another replication option requires physically moving the data from a single storage stamp to multiple stamps within the region.

- Geo-redundant storage (GRS): Your data is replicated to a secondary region that Microsoft pairs with your primary region. An update is first committed to the primary location and replicated three times there by using LRS. The update is then replicated asynchronously to the secondary region, where it is also stored by using LRS. Data in the secondary region can be read only after a failover from the primary to the secondary region. Read-access geo-redundant storage (RA-GRS) lets you read from the secondary region at any time, whether or not a failover has occurred.

- Geo-zone-redundant storage (GZRS): Data is replicated synchronously across three Azure availability zones in the primary region (as with ZRS) and also replicated asynchronously to a secondary region, where it is stored by using LRS, for protection from regional disasters. You can optionally enable read access to data in the secondary region with read-access geo-zone-redundant storage (RA-GZRS).
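To make the trade-offs above concrete, here is a minimal decision helper, a sketch rather than official guidance. The returned strings are the SKU names Azure uses for Standard storage accounts (Standard_LRS, Standard_ZRS, Standard_GRS, Standard_RAGRS, Standard_GZRS, Standard_RAGZRS); the function name and its three yes/no questions are hypothetical simplifications of the requirements discussed in this post.

```python
def choose_redundancy(survive_zone_outage: bool,
                      survive_region_outage: bool,
                      read_from_secondary: bool = False) -> str:
    """Map three yes/no requirements to a Standard storage redundancy SKU name.

    Hypothetical helper: it only encodes the option descriptions above.
    """
    if read_from_secondary and not survive_region_outage:
        # Read access to a secondary region only exists for geo-replicated options.
        raise ValueError("read access to a secondary region requires geo-replication")
    if survive_region_outage:
        base = "GZRS" if survive_zone_outage else "GRS"
        return f"Standard_RA{base}" if read_from_secondary else f"Standard_{base}"
    return "Standard_ZRS" if survive_zone_outage else "Standard_LRS"


print(choose_redundancy(False, False))        # IT test/training data
print(choose_redundancy(False, True, True))   # public website that must stay readable
print(choose_redundancy(False, True))         # private documents, no secondary reads
```

These three calls mirror the three lab tasks in Part Two (LRS, RA-GRS and GRS respectively).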

Azure Storage Security

Administrators use different strategies to ensure their data is secure. Common approaches include encryption, authentication, authorization, and user access control with credentials, file permissions, and private signatures. Azure Storage offers a suite of security capabilities based on common strategies to help you secure your data.

- Encryption: All data written to Azure Storage is automatically encrypted by using Azure Storage encryption.

- Authentication: Microsoft Entra ID and role-based access control (RBAC) are supported for Azure Storage for both resource management operations and data operations.

- Assign RBAC roles scoped to an Azure storage account to security principals, and use Microsoft Entra ID to authorize resource management operations like key management.

- Microsoft Entra integration is supported for data operations on Azure Blob Storage and Azure Queue Storage.

- Data in Transit: Data can be secured in transit between an application and Azure by using Client-Side Encryption, HTTPS, or SMB 3.0.

- Disk encryption: Operating system disks and data disks used by Azure Virtual Machines can be encrypted by using Azure Disk Encryption.

- Shared access signatures: Delegated access to the data objects in Azure Storage can be granted by using a shared access signature (SAS).

- Authorization: Every request made against a secured resource in Blob Storage, Azure Files, Queue Storage, or Azure Cosmos DB (Azure Table Storage) must be authorized. Authorization ensures that resources in your storage account are accessible only when you want them to be, and only to those users or applications to whom you grant access.

Authorization strategies for Azure storage

- Microsoft Entra ID: Microsoft Entra ID is Microsoft’s cloud-based identity and access management service. With Microsoft Entra ID, you can assign fine-grained access to users, groups, or applications by using role-based access control (RBAC).

- Shared Key: Shared Key authorization relies on your Azure storage account access keys and other parameters to produce an encrypted signature string, which is passed on the request in the Authorization header. Because the account key can sign any request, anyone who holds it has full access to all the resources in the storage account.

- Shared access signatures: A SAS delegates access to a particular resource in your Azure storage account with specified permissions and for a specified time interval without compromising your account keys.

- Anonymous access to containers and blobs: You can optionally make blob resources public at the container or blob level. A public container or blob is accessible to any user for anonymous read access. Read requests to public containers and blobs don’t require authorization.
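The following sketch shows why a leaked account key is so dangerous under Shared Key authorization: the signature is an HMAC-SHA256 of a "string-to-sign" computed with the base64-decoded account key, so whoever holds the key can sign any request. This is an illustration under stated assumptions: the real string-to-sign concatenates the HTTP verb, a fixed list of standard headers, canonicalized `x-ms-` headers and the canonicalized resource, while the example below uses a simplified placeholder string and a made-up key, so its output would not authorize a real request.

```python
import base64
import hashlib
import hmac


def sign_shared_key(account_name: str, account_key_b64: str, string_to_sign: str) -> str:
    """Return an Authorization header value of the form 'SharedKey <account>:<signature>'."""
    key = base64.b64decode(account_key_b64)          # account keys are base64-encoded
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"SharedKey {account_name}:{signature}"


# Hypothetical account name, fake key and simplified string-to-sign, for illustration only.
fake_key = base64.b64encode(b"not-a-real-account-key").decode("ascii")
header = sign_shared_key("mystorageacct", fake_key, "GET\n/mystorageacct/container/blob.txt")
print(header)
```

Note that nothing in the signing step identifies *who* is calling; that is the gap Microsoft Entra ID and user-scoped RBAC close.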

Secure storage endpoints

To secure storage endpoints, consider the following:

- The Firewalls and virtual networks settings restrict access to your storage account from specific subnets on virtual networks or public IPs.

- You can configure the service to allow access to one or more public IP ranges.

- Subnets and virtual networks must exist in the same Azure region or region pair as your storage account.
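When the firewall is restricted to selected networks, IP network rules behave like an allow list with a default action of deny. The sketch below models only the IP-rule part (virtual network rules are evaluated separately and aren't modeled); the helper name and the documentation-range addresses are hypothetical.

```python
import ipaddress


def is_allowed(client_ip: str, allowed_rules: list) -> bool:
    """Return True if client_ip matches any allowed single address or CIDR range."""
    address = ipaddress.ip_address(client_ip)
    for rule in allowed_rules:
        # ip_network accepts a single address ("198.51.100.7") or a range ("203.0.113.0/24").
        if address in ipaddress.ip_network(rule, strict=False):
            return True
    return False  # no rule matched: the default action denies the request


office_rules = ["203.0.113.0/24", "198.51.100.7"]  # hypothetical office ranges
print(is_allowed("203.0.113.45", office_rules))
print(is_allowed("192.0.2.1", office_rules))
```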

Azure Blobs

Azure Blob Storage is a service that stores unstructured data in the cloud as objects or blobs. Blob stands for Binary Large Object. Blob Storage is also referred to as object storage or container storage.

Characteristics of Blob Storage:

- Can store any type of text or binary data. Some examples are text documents, images, video files, and application installers.

- Uses three resources to store and manage your data:

- An Azure storage account.

- Containers in an Azure storage account.

- Blobs in a container.

- To implement Blob Storage, you configure several settings:

- Blob container options.

- Blob types and upload options.

- Blob Storage access tiers.

- Blob lifecycle rules.

- Blob object replication options.

Uses for Blob storage

- Serve images or documents directly to a browser.

- Store files for distributed access, such as during an installation process.

- Stream video and audio.

- Store data for backup and restore, disaster recovery and archiving.

- Store data for access by an on-premises or Azure-hosted application or service.

Blob Containers

- All blobs must be in a container.

- A container can store an unlimited number of blobs.

- An Azure storage account can contain an unlimited number of containers.

- You can create the container in the Azure portal.

- You upload blobs into a container.

Configure a container

You can configure two settings:

Name: The name must be unique within the Azure storage account.

- The name can contain only lowercase letters, numbers, and hyphens.

- The name must begin with a letter or a number.

- The minimum length for the name is three characters.

- The maximum length for the name is 63 characters.
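The naming rules above can be checked before you try to create a container. This is a minimal sketch: besides the rules listed, Azure's container naming documentation also requires that a name end with a letter or number and that every hyphen be immediately preceded and followed by a letter or number (so no consecutive hyphens), which the regular expression below encodes. The function name is hypothetical.

```python
import re

# Starts with a letter or digit; after that, letters/digits or a single hyphen that
# must be followed by a letter or digit (so no trailing or consecutive hyphens).
_CONTAINER_NAME = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9]))*")


def is_valid_container_name(name: str) -> bool:
    """Return True if name satisfies the Azure blob container naming rules."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


for candidate in ["publicwebcontent", "Public-Web", "web--content", "ab"]:
    print(candidate, is_valid_container_name(candidate))
```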

Public Access Level: The access level specifies whether the container and its blobs can be accessed publicly. By default, container data is private and visible only to the account owner. There are three access level choices:

- Private: (Default) Prohibit anonymous access to the container and blobs.

- Blob: Allow anonymous public read access for the blobs only.

- Container: Allow anonymous public read and list access to the entire container, including the blobs.

Blob Access Tiers

The following access tiers can be assigned to blob data and should be considered when you manage the lifecycle of your data:

- Hot Tier: Optimized for frequent reads and writes of objects. A typical use case is data that is actively being processed. It has the lowest access costs, but higher storage costs than the Cool, Cold and Archive tiers.

- Cool Tier: Optimized for storing large amounts of data that’s infrequently accessed but needs to be immediately available. Intended for data that remains in the Cool tier for at least 30 days. Use cases for the Cool tier include short-term backup and disaster recovery datasets and older media content. Storage costs are lower than in the Hot tier, but access costs are higher.

- Cold Tier: Optimized for storing large amounts of data that’s infrequently accessed. This tier is intended for data that can remain in the tier for at least 90 days.

- Archive Tier: Offline tier that’s optimized for data that can tolerate several hours of retrieval latency. Data must remain in the Archive tier for at least 180 days or be subject to an early deletion charge. Data for the Archive tier includes secondary backups, original raw data, and legally required compliance information. This tier is the most cost-effective option for storing data. Accessing data is more expensive in the Archive tier than accessing data in the other tiers.
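The minimum periods above matter because Azure bills an early deletion charge, prorated for the days remaining in the tier's minimum period, when a blob is deleted or moved out of the Cool, Cold or Archive tier too soon. The sketch below computes only the number of remaining days; it's a hypothetical helper, and actual per-GB prices vary by region and aren't modeled.

```python
# Minimum number of days a blob is expected to stay in each tier (from the tier list above).
MINIMUM_DAYS = {"hot": 0, "cool": 30, "cold": 90, "archive": 180}


def early_deletion_days(tier: str, days_in_tier: int) -> int:
    """Days of the minimum period still billed if the blob is deleted or moved now."""
    minimum = MINIMUM_DAYS[tier.lower()]
    return max(0, minimum - days_in_tier)


# A blob deleted from Archive after 45 days is billed as if stored for the remaining days.
print(early_deletion_days("archive", 45))
```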

Azure Files

Azure Files offers shared storage for applications by using the industry-standard Server Message Block (SMB) and Network File System (NFS) protocols. Azure virtual machines (VMs) and cloud services can share file data across application components by using mounted shares. On-premises applications can also access file data in the share.

Characteristics of Azure Files

- Azure Files stores data as true directory objects in file shares.

- Azure Files provides shared access to files across multiple VMs. Any number of Azure virtual machines or roles can mount and access an Azure file share simultaneously.

- Applications that run in Azure VMs or cloud services can mount an Azure file share to access file data. This process is similar to how a desktop application mounts a typical SMB share.

- Azure Files offers fully managed file shares in the cloud. Azure file shares can be mounted concurrently by cloud or on-premises deployments of Windows, Linux, and macOS.

Scenarios for using Azure Files

- Replace or supplement traditional on-premises file servers or NAS devices by using Azure Files.

- Directly access Azure file shares by using most operating systems, such as Windows, macOS, and Linux, from anywhere in the world.

- Lift and shift applications to the cloud with Azure Files when they expect a file share to store application or user data.

- Replicate Azure file shares to Windows Servers by using Azure File Sync. You can replicate on-premises or in the cloud for performance and distributed caching of the data where it’s being used.

- Store shared application settings such as configuration files in Azure Files.

- Use Azure Files to store diagnostic data such as logs, metrics, and crash dumps in a shared location.

- Azure Files is a good option for storing tools and utilities that are needed for developing or administering Azure VMs or cloud services.

Soft delete for Azure Files

Soft delete lets you recover deleted files and file shares.

Characteristics of soft delete for Azure files

- Soft delete for file shares is enabled at the storage account level.

- Soft delete transitions content to a soft deleted state instead of being permanently erased.

- Soft delete lets you configure the retention period. The retention period is the amount of time that soft deleted file shares are stored and available for recovery.

- Soft delete provides a retention period between 1 and 365 days.

- Soft delete can be enabled on either new or existing file shares.

- Soft delete doesn’t work for Network File System (NFS) shares.
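The retention rules above boil down to simple date arithmetic: a soft-deleted share stays recoverable until its deletion time plus the retention period. Azure tracks this for you (the portal shows how many retention days remain), so the sketch below is only a hypothetical model of that logic, including the 1 to 365 day limit.

```python
from datetime import datetime, timedelta, timezone


def recovery_deadline(deleted_at: datetime, retention_days: int) -> datetime:
    """Return the point after which a soft-deleted share can no longer be recovered."""
    if not 1 <= retention_days <= 365:
        raise ValueError("retention period must be between 1 and 365 days")
    return deleted_at + timedelta(days=retention_days)


def is_recoverable(deleted_at: datetime, retention_days: int, now: datetime) -> bool:
    return now < recovery_deadline(deleted_at, retention_days)


deleted = datetime(2024, 1, 1, tzinfo=timezone.utc)  # hypothetical deletion time
print(recovery_deadline(deleted, 14))
```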

Advantages to using soft delete for Azure files

- Recovery from accidental data loss. Use soft delete to recover data that is deleted or corrupted.

- Upgrade scenarios. Use soft delete to restore to a known good state after a failed upgrade attempt.

- Ransomware protection. Use soft delete to recover data without paying ransom to cybercriminals.

- Long-term retention. Use soft delete to help comply with data retention requirements.

- Business continuity. Use soft delete to prepare your infrastructure to be highly available for critical workloads.

Part Two: Lab Considerations and Setup

In this lab, we are going to use the scenario provided in Microsoft’s learning module “Secure storage for Azure Files and Azure Blob storage”. We will create and configure storage solutions for the following use cases:

- Storage for testing and training for an IT department,

- Storage for a public website,

- Private storage for internal company documents,

- Shared file storage that can be accessed by remote offices,

- Storage for an Azure App.

This lab will require that we configure storage accounts, Azure blobs, Azure Files, storage encryption and storage networking.

NOTE: It is important to remember that you need one storage account for each group of settings that you want to apply to your data. For example, if two sets of data differ in even one setting, such as location, billing or replication strategy, they need two different storage accounts.

The following factors determine the number of storage accounts you need:

- Data Diversity: Where data is consumed, how sensitive it is, which group pays the bills etc. Consider the following two scenarios:

- Do you have data that is specific to a country/region? If so, you might want to store the data in a datacenter in that country/region for performance or compliance reasons. You need one storage account for each geographical region.

- Do you have some data that is proprietary and some for public consumption? If so, you could enable virtual networks for the proprietary data and not for the public data. Separating proprietary data and public data requires separate storage accounts.

- Cost Sensitivity: A storage account by itself has no financial cost; however, the settings you choose for the account do influence the cost of services in the account. E.g. LRS costs less than GRS. You can put your critical data in a GRS account and less critical data in an LRS account, which means two storage accounts.

- Tolerance for management overhead: Everyone in an administrator role needs to understand the purpose of each storage account so they add new data to the correct account.
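The "one storage account per group of settings" rule can be expressed as grouping datasets by their settings. The sketch below is hypothetical: the dataset names and billing groups are made up, and only a few of the settings discussed above are modeled. Applied to the datasets in this lab, two website datasets with identical settings share an account while everything else gets its own.

```python
from typing import NamedTuple


class AccountSettings(NamedTuple):
    """Settings that force data into separate storage accounts when they differ."""
    location: str
    redundancy: str
    public_network_access: bool
    billing_group: str


def group_by_settings(datasets: dict) -> dict:
    """Group dataset names by identical settings; each group needs its own storage account."""
    groups = {}
    for name, settings in datasets.items():
        groups.setdefault(settings, []).append(name)
    return groups


lab_data = {
    "it-training": AccountSettings("eastus", "Standard_LRS", True, "it"),
    "website-images": AccountSettings("eastus", "Standard_RAGRS", True, "marketing"),
    "website-videos": AccountSettings("eastus", "Standard_RAGRS", True, "marketing"),
    "internal-documents": AccountSettings("eastus", "Standard_GRS", False, "corporate"),
}
print(len(group_by_settings(lab_data)))  # 3 storage accounts
```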

Prerequisites

To implement the steps in this lab, you need an Azure subscription. Azure offers a free account for new users that includes $200 of credit to use within the first 30 days, plus free access to a selection of popular services. You can open a free account on Azure by following this link https://azure.microsoft.com/en-us/pricing/purchase-options/azure-account?icid=azurefreeaccount. Once the credit is used up or the 30 days end, whichever comes first, you need to upgrade to a Pay-as-you-go subscription to keep using your resources; a set of common services stays free for 12 months, and you pay for any usage beyond the free limits. If you already have an Azure Pay-as-you-go subscription, log in to the Azure portal at https://portal.azure.com/

Task 1: Provide storage for the IT department testing and training

The IT department needs to prototype different storage scenarios and to train new personnel. The content isn’t important enough to back up and doesn’t need to be restored if the data is overwritten or removed. A simple configuration that can be easily changed is desired.

Architecture diagram

Tasks required

- Create a storage account.

- Configure basic settings for security and networking.

Create a resource group and a storage account.

1. Create and deploy a resource group to hold all your project resources. Keeping the project's resources in one resource group makes them easier to manage together.

- In the Azure portal, search for and select Resource groups.

- Select + Create.

- Give your resource group a name. In my case, I used Storage-RG.

- Select a region. Use this region throughout the project. In my case, I used East US.

- Select Review + create to validate the resource group.

- Select Create to deploy the resource group. The resource group should look like this when you check on the Overview page.

2. Create and deploy a storage account to support testing and training.

- In the Azure portal, search for and select Storage accounts. You may also see Storage accounts (classic); make sure not to select it.

- Select + Create.

- On the Basics tab, select your Resource group.

- Provide a Storage account name. The storage account name must be unique in Azure.

- Set the Performance to Standard.

- Select Review + create, and then Create.

- Wait for the storage account to deploy and then Go to resource.

Configure simple settings in the storage account.

1. The data in this storage account doesn’t require high availability or durability, and the lowest-cost storage solution is desired. We therefore change the redundancy from the default geo-redundant option to LRS.

Take note that we could have completed this setup when creating the Storage account.

- In your storage account, in the Data management section, select the Redundancy blade.

- Select Locally-redundant storage (LRS) in the Redundancy drop-down.

- Be sure to Save your changes.

- Refresh the page and notice the content only exists in the primary location.

2. As Microsoft recommends, the storage account should only accept requests from secure connections. This means that any REST API call to Azure Storage must be made over HTTPS (TCP port 443); requests over plain HTTP are rejected.

- In the Settings section, select the Configuration blade.

- Ensure Secure transfer required is Enabled.

The only exception is when you’re using NFS Azure file shares where the Secure transfer required property must be disabled for them to work.

3. Developers would like the storage account to use at least TLS version 1.2 to encrypt the communication between the client application and the Azure Storage account.

Transport Layer Security (TLS) is a standard cryptographic protocol that ensures privacy and data integrity between clients and services over the internet. TLS 1.0 and 1.1 have known weaknesses and are being retired, so requiring at least TLS 1.2 is the recommended baseline for the organization.

- In the Settings section, select the Configuration blade.

- Ensure the Minimal TLS version is set to Version 1.2.

4. Until the storage is needed again, disable key-based requests to the storage account. As discussed above, Shared Key authorization gives anyone who holds an account key full access to the account's resources, which isn't ideal for securing this storage account. We will therefore disallow key-based authorization on the storage account. Take note that this also blocks shared access signatures (SAS) signed with the account key; user delegation SAS tokens, which are secured with Microsoft Entra credentials, still work.

- In the Settings section, select the Configuration blade.

- Ensure Allow storage account key access is Disabled.

- Be sure to Save your changes.

5. Our use case requires that students can access the organization's training material stored in this storage account. Disabling key access removed the need for users to hold keys or signatures, but on its own it doesn't make the content reachable. At the same time, we can't leave our data unprotected, which is why we required that communication between clients and the storage account uses TLS encryption.

To ensure the storage account allows public access from all networks to meet our needs:

- In the Security + networking section, select the Networking blade.

- Ensure Public network access is set to Enabled from all networks.

- Be sure to Save your changes.
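To double-check Task 1 later, it can help to write the target configuration down and compare it with what the account reports. The sketch below is a hypothetical drift check, not an Azure API: the keys are the property names used by `StorageAccountUpdateParameters` in the `azure-mgmt-storage` Python package, but `sku` is flattened to a plain string here (the SDK uses a `Sku` object), and the "from all networks" part of the networking setting is the firewall's default action, which isn't modeled.

```python
# Task 1 target settings, keyed by azure-mgmt-storage property names (sku flattened to a string).
TASK1_SETTINGS = {
    "sku": "Standard_LRS",               # Redundancy: locally-redundant storage
    "enable_https_traffic_only": True,   # Secure transfer required: Enabled
    "minimum_tls_version": "TLS1_2",     # Minimal TLS version: Version 1.2
    "allow_shared_key_access": False,    # Allow storage account key access: Disabled
    "public_network_access": "Enabled",  # Public network access: Enabled
}


def find_drift(actual: dict, desired: dict = TASK1_SETTINGS) -> dict:
    """Return {setting: (actual, desired)} for every setting that doesn't match."""
    return {key: (actual.get(key), value)
            for key, value in desired.items()
            if actual.get(key) != value}


# Hypothetical values copied by hand from the portal's Configuration blade.
observed = dict(TASK1_SETTINGS, allow_shared_key_access=True)
print(find_drift(observed))
```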

Task 2: Provide storage for the public website

The company website supplies product images, videos, marketing literature, and customer success stories. Customers are located worldwide and demand is rapidly expanding. The content is mission-critical and requires low latency load times. It’s important to keep track of the document versions and to quickly restore documents if they’re deleted.

Architecture diagram

Tasks required

- Create a storage account with high availability, since it holds mission-critical data that needs to be online all the time.

- Ensure the storage account has anonymous public access.

- Create a blob storage container for the website documents i.e. images, videos, marketing literature, and customer success stories.

- Enable soft delete so that files can be quickly restored if they're accidentally deleted.

- Enable blob versioning to keep track of document versions for purposes such as disaster recovery and backup, and to allow reverting to previous versions as needed.

Create a storage account with high availability.

1. Create a storage account to support the public website.

- In the portal, search for and select Storage accounts.

- Select + Create.

- For resource group select the initial resource group Storage-RG.

- Set the Storage account name to publicwebsitestorage01.

- Take the defaults for other settings.

- Select Review + create and then Create.

- Wait for the storage account to deploy, and then select Go to resource.

2. This storage requires high availability if there’s a regional outage. As discussed above in Part 1, we need to set the redundancy option to RA-GRS to have the option to read from the secondary region regardless of whether Microsoft initiates a failover from the primary to the secondary.

- In the storage account, in the Data management section, select the Redundancy blade.

- Ensure Read-access Geo-redundant storage is selected.

- Review the primary and secondary location information.

3. Information on the public website should be accessible without requiring customers to login.

- In the storage account, in the Settings section, select the Configuration blade.

- Ensure the Allow blob anonymous access setting is Enabled.

- Be sure to Save your changes.

Create a blob storage container with anonymous read access

- The public website has various images and documents stored as blobs. Blobs are stored in containers which will then be stored in our storage account. Let’s create a blob storage container for the content.

- In your storage account, in the Data storage section, select the Containers blade

- Select + Container.

- A pane will appear on the right where you enter the name of the container, publicwebcontent. The requirement states that this content must be accessible by the public, but you can’t enable public access here because the storage account has anonymous access disabled.

- To fix this, select Create in that pane to create the container for now. Then, in the Settings section of the publicwebsitestorage01 storage account, select Configuration.

- Enable Allow Blob anonymous access as shown below. From the screenshot you can read the explanation provided by Microsoft.

- Ensure you Save your settings. Then click on Containers in the Data Storage section. Here you’ll see the container publicwebcontent that you have created.

- Select the container to open its Overview page. Select Change access level and choose Blob (anonymous read access for blobs only). This allows users to view blobs, such as images, without being authenticated.

- Click OK.
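The steps above show that anonymous access needs two switches: the account-level "Allow blob anonymous access" setting and the container's access level. When the account-level setting is disabled, it overrides whatever level the container has. The sketch below models that combination; the enum and function names are hypothetical.

```python
from enum import Enum


class ContainerAccess(Enum):
    PRIVATE = "private"      # no anonymous access
    BLOB = "blob"            # anonymous read access for blobs only
    CONTAINER = "container"  # anonymous read and list access for the whole container


def anonymous_access(account_allows_anonymous: bool, level: ContainerAccess) -> dict:
    """Return what an unauthenticated caller can do against a container."""
    if not account_allows_anonymous:
        # The account-level setting wins over any container-level access level.
        return {"read_blob": False, "list_blobs": False}
    return {
        "read_blob": level in (ContainerAccess.BLOB, ContainerAccess.CONTAINER),
        "list_blobs": level is ContainerAccess.CONTAINER,
    }


# publicwebcontent after this task: account setting enabled, container level "Blob".
print(anonymous_access(True, ContainerAccess.BLOB))
```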

Practice uploading files and testing access.

1. For testing, upload a file to the public container. The type of file doesn’t matter. A small image or text file is a good choice.

- Ensure you are viewing your container.

- Select Upload.

- Select Browse for files, then browse to a file of your choice and select it.

- Select Upload.

- Your uploaded file will appear. If not, Refresh the page and ensure your file was uploaded.

2. Determine the URL for your uploaded file. Open a browser and test the URL to make sure it is accessible.

- Select your uploaded file.

- On the Overview tab, copy the URL.

- Paste the URL into a new browser tab.

- If you uploaded an image file, it will display in the browser. Other file types are typically downloaded instead.
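The URL you copied follows a predictable pattern in the Azure public cloud: `https://<account>.blob.core.windows.net/<container>/<blob>`. With RA-GRS, the read-only secondary endpoint adds `-secondary` to the account name. The sketch below builds both; the helper and the blob names are hypothetical, and other Azure clouds use different domain suffixes.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str, secondary: bool = False) -> str:
    """Build the Blob service URL for a blob in the Azure public cloud."""
    host = f"{account}-secondary" if secondary else account
    # quote() percent-encodes spaces and other unsafe characters but keeps "/",
    # so virtual directory paths inside the blob name are preserved.
    return f"https://{host}.blob.core.windows.net/{container}/{quote(blob_name)}"


print(blob_url("publicwebsitestorage01", "publicwebcontent", "my image.png"))
print(blob_url("publicwebsitestorage01", "publicwebcontent", "img/logo.png", secondary=True))
```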

Configure soft delete

1. It’s important that the website documents can be restored if they’re deleted accidentally or erroneously. Azure’s solution for this is soft delete. When blob soft delete is enabled for a storage account, a deleted blob can be recovered within a retention period that you specify; container soft delete does the same for a container and its contents.

Configure blob soft delete for 21 days.

- Go to the Overview blade of the storage account.

- Scroll down to the Properties section and locate the Blob service section.

- Select the Blob soft delete setting.

- Ensure the Enable soft delete for blobs is checked.

- Change the Keep deleted blobs for (in days) setting to 21.

- Notice you can also Enable soft delete for containers.

- Don’t forget to Save your changes.

2. If something gets deleted, you need to practice using soft delete to restore the files.

- Navigate to your container where you uploaded a file.

- Select the file you uploaded and then select Delete.

- Select Delete to confirm deleting the file.

- On the container Overview page, toggle the slider Show deleted blobs. This toggle is to the right of the search box.

- Select your deleted file, and use the ellipsis on the far right to Undelete the file.

- Close the blob menu and confirm the file has been restored.

Configure blob versioning

- It’s important to keep track of the different website product document versions. Enabling blob storage versioning allows us to maintain previous versions of a blob when it is either modified or accidentally deleted. This gives us the ability to restore an earlier version of a blob to recover your data whenever necessary.

- Go to the Overview blade of the storage account.

- In the Properties section, locate the Blob service section.

- Select the Versioning setting.

- Ensure the Enable versioning for blobs checkbox is checked.

- Notice your options to keep all versions or delete versions after.

- Don’t forget to Save your changes.
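To see what versioning changes, the toy model below keeps every overwritten or deleted blob as a previous version and restores one by copying it back over the current blob, which is how Azure restores a previous version. It's a hypothetical in-memory sketch, not the Azure SDK: Azure identifies versions by timestamp-based version IDs rather than list positions.

```python
from typing import Dict, List, Optional


class VersionedContainer:
    """Toy in-memory model of blob versioning (not the Azure SDK)."""

    def __init__(self) -> None:
        self._current: Dict[str, bytes] = {}         # blob name -> current version
        self._previous: Dict[str, List[bytes]] = {}  # blob name -> previous versions, oldest first

    def upload(self, name: str, data: bytes) -> None:
        # Overwriting a blob keeps the old content as a previous version.
        if name in self._current:
            self._previous.setdefault(name, []).append(self._current[name])
        self._current[name] = data

    def delete(self, name: str) -> None:
        # With versioning on, deleting a blob turns its current version into a previous version.
        self._previous.setdefault(name, []).append(self._current.pop(name))

    def current(self, name: str) -> Optional[bytes]:
        return self._current.get(name)

    def previous_versions(self, name: str) -> List[bytes]:
        return list(self._previous.get(name, []))

    def restore(self, name: str, index: int) -> None:
        """Promote a previous version by copying it back over the current blob."""
        self.upload(name, self._previous[name][index])


docs = VersionedContainer()
docs.upload("brochure.pdf", b"v1")  # hypothetical product document
docs.upload("brochure.pdf", b"v2")
docs.delete("brochure.pdf")
docs.restore("brochure.pdf", 1)     # bring back v2
print(docs.current("brochure.pdf"))
```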

Task 3: Provide private storage for internal company documents

The company needs storage for their offices and departments. This content is private to the company and shouldn’t be shared without consent. This storage requires high availability if there’s a regional outage. The company wants to use this storage to back up the public website.

Architecture diagram

Tasks required

- Create a storage account for the company private documents following the principle of creating as many storage accounts as needed when the settings we want to apply to our data differ.

- Configure redundancy for the storage account to ensure our data stays available even during a disaster in one part of the world.

- Configure a shared access signature so that partners have restricted access to a specific file.

- Back up the public website storage.

- Implement lifecycle management to move content to the cool tier since as time goes by, frequent access to the data won’t be as frequent.

Create a storage account and configure high availability.

- Create a storage account for the internal private company documents.

- In the portal, search for and select Storage accounts.

- Select + Create.

- Select the Resource group created in the previous lab and name the storage account privatecompanystorage01.

- Click on the Networking tab and set the network access option to Disable public access and use private access.

- Select Review + create, and then Create the storage account.

- Wait for the storage account to deploy, and then select Go to resource.

2. This storage requires high availability if there’s a regional outage. Read access in the secondary region is not required. Configure the appropriate level of redundancy.

- In the storage account, in the Data management section, select the Redundancy blade.

- Ensure Geo-redundant storage (GRS) is selected.

- Refresh the page.

- Review the primary and secondary location information.

- Save your changes.
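The redundancy choice in step 2 can be summarised as a small decision helper. This is a simplified sketch: the function is hypothetical, but the values it returns are the real redundancy SKU names used by Azure Storage (for example with the Azure CLI `az storage account create --sku` option). Real decisions also weigh cost, region support and account type, since not every option is available everywhere.

```python
def choose_redundancy(survive_region_outage: bool, read_secondary: bool = False,
                      survive_zone_outage: bool = False) -> str:
    """Map high-level availability requirements to a Standard storage redundancy SKU.

    Simplified illustration only; read_secondary is ignored when no secondary
    region is needed.
    """
    if not survive_region_outage:
        # LRS and ZRS keep every copy inside the primary region.
        return "Standard_ZRS" if survive_zone_outage else "Standard_LRS"
    # GRS/GZRS also replicate asynchronously to a secondary region.
    base = "GZRS" if survive_zone_outage else "GRS"
    # The RA- variants add read access to the data in the secondary region.
    return f"Standard_RA{base}" if read_secondary else f"Standard_{base}"


# Task 3: survive a regional outage, no read access needed in the secondary region.
print(choose_redundancy(survive_region_outage=True, read_secondary=False))  # Standard_GRS
```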

Create a storage container, upload a file, and restrict access to the file.

- Create a private storage container for the corporate data.

- In the storage account, in the Data storage section, select the Containers blade.

- Select + Container.

- Enter the Name of the container, privatecompanycontent.

- Ensure the Public access level is Private (no anonymous access).

- Select Create.

2. For testing, upload a file to the private container. The type of file doesn’t matter. A small image or text file is a good choice. Test to ensure the file isn’t publicly accessible.

- Select the privatecompanycontent container.

- Select Upload. You will get an error stating that you are not authorized to perform this action. This is because we disabled public network access to this storage account, so requests from the portal on your machine, which travel over the public internet, are blocked.

- We have two options to fix this:

- Connect over a private network: create a virtual network in Azure, give the storage account a private endpoint in it, and connect your computer to that virtual network through a point-to-site VPN. This is a lot of work for a lab.

- A simpler method: instead of disabling public access outright, allow public access only from selected IP addresses and add the public IP address of your local machine to the allowed list. This is the option we’ll go for.

- Select Networking in the Security + networking section.

- For public access, select the option Enabled from selected virtual networks and IP addresses.

- In the Firewall section, enable the Add your client IP address option.

- Click on Save.

- Select the privatecompanycontent container. It should now open.

- Select Upload.

- Browse for files and select a file.

- Upload the file.

- Select the uploaded file.

- On the Overview tab, copy the URL.

- Paste the URL into a new browser tab.

- Verify the file doesn’t display and you receive an error.

3. An external partner requires read and write access to the file for at least the next 24 hours. Configure and test a shared access signature (SAS).

- Select your uploaded blob file and move to the Generate SAS tab.

- In the Permissions drop-down, select only Read and Write, the permissions the partner needs.

- Verify the Start and expiry date/time spans the next 24 hours.

- Select Generate SAS token and URL.

- Paste the Blob SAS URL into a new browser tab.

- Verify you can access the file. If you have uploaded an image file it will display in the browser. Other file types will be downloaded.
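Before handing a SAS URL to a partner, it can be worth double-checking what it grants. A SAS is a set of query parameters appended to the blob URL, so a few lines of standard-library Python can read them back. This is a sketch: `inspect_sas_url` is a hypothetical helper, the example URL uses a placeholder signature and a made-up file name, and it assumes the start and expiry timestamps have no fractional seconds.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlparse


def _parse_sas_time(value: str) -> datetime:
    # Assumes UTC ISO 8601 strings such as 2024-05-01T10:00:00Z.
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def inspect_sas_url(url: str) -> dict:
    """Summarise the permissions and validity window encoded in a SAS URL.

    Reads the standard query parameters sp (signed permissions), st (start),
    se (expiry) and sr (signed resource; "b" means a single blob). The signature
    (sig) is not checked: only Azure Storage can validate it.
    """
    params = {key: values[0] for key, values in parse_qs(urlparse(url).query).items()}
    start, expiry = _parse_sas_time(params["st"]), _parse_sas_time(params["se"])
    return {
        "permissions": set(params["sp"]),
        "resource": params.get("sr"),
        "valid_hours": (expiry - start).total_seconds() / 3600,
    }


# Hypothetical URL with a placeholder signature, shaped like a blob SAS URL.
sas_url = ("https://privatecompanystorage01.blob.core.windows.net/privatecompanycontent/test.txt"
           "?sp=rw&st=2024-05-01T10:00:00Z&se=2024-05-02T10:00:00Z&spr=https&sv=2022-11-02"
           "&sr=b&sig=PLACEHOLDER")
info = inspect_sas_url(sas_url)
print(sorted(info["permissions"]), info["valid_hours"])  # ['r', 'w'] 24.0
```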

Configure storage access tiers and content replication.

- To save on costs, after 30 days, move blobs from the hot tier to the cool tier. Lifecycle management offers a rule-based policy that you can use to transition blob data to the appropriate access tiers or to expire data at the end of the data lifecycle.

- Return to the storage account, privatecompanystorage01.

- On the Overview page, in the Properties section, notice the Default access tier is set to Hot.

- In the Data management section, select the Lifecycle management blade.

- Select Add rule.

- Set the Rule name to movetocool.

- Set the Rule scope to Apply rule to all blobs in the storage account.

- Select Next.

- Ensure Last modified is selected.

- Set More than (days ago) to 30.

- In the Then drop-down select Move to cool storage.

- Take note of the other lifecycle options in the drop-down.

- Add the rule.
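Behind the portal, a lifecycle management rule is stored as a JSON policy. The sketch below builds a policy equivalent to the movetocool rule using the field names from the Azure Storage lifecycle management policy schema; the helper function itself is hypothetical. A document of this shape can be applied from the command line with `az storage account management-policy create --account-name <account> --resource-group <group> --policy @policy.json`.

```python
import json


def move_to_cool_policy(rule_name: str = "movetocool", days: int = 30) -> dict:
    """Build a lifecycle management policy equivalent to the portal rule above."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": rule_name,
                "type": "Lifecycle",
                "definition": {
                    # No prefix filter, so the rule covers every block blob in the account.
                    "filters": {"blobTypes": ["blockBlob"]},
                    "actions": {
                        "baseBlob": {
                            # Move to cool once a blob hasn't been modified for `days` days.
                            "tierToCool": {"daysAfterModificationGreaterThan": days}
                        }
                    },
                },
            }
        ]
    }


print(json.dumps(move_to_cool_policy(), indent=2))
```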

2. The public website files need to be backed up to another storage account. Object replication asynchronously copies block blobs between a source storage account and a destination account. When you configure object replication, you create a replication policy that specifies the source storage account and the destination account. A replication policy can include one or more rules, each specifying a source container, a destination container and which block blobs in the source container will be replicated.

- In your private storage account, privatecompanystorage01, create a new container called backup. Use the default values. Refer back to Task 02 if you need detailed instructions.

- Navigate to your publicwebsitestorage01 storage account. This storage account was created in the previous task.

- In the Data management section, select the Object replication blade.

- Select Create replication rules.

- Set the Destination storage account to the privatecompanystorage01 storage account.

- Set the Source container to publicwebcontent and the Destination container to backup.

- Create the replication rule.

- To test that our backup works, upload a file to the public container. Return to the private storage account and refresh the backup container. Within a few minutes your public website file should appear in the backup container.
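Object replication has prerequisites that are easy to miss when configuring it with scripts or templates: blob versioning must be enabled on both the source and destination accounts, and change feed must be enabled on the source account. The hypothetical helper below encodes those two rules as a pre-flight check. The account names come from this lab, but the settings are made-up inputs rather than values read from Azure.

```python
from dataclasses import dataclass


@dataclass
class AccountSettings:
    """Hypothetical snapshot of the blob service settings relevant to object replication."""
    name: str
    versioning: bool
    change_feed: bool


def replication_problems(source: AccountSettings, destination: AccountSettings) -> list[str]:
    """Return the unmet object replication prerequisites (an empty list means ready)."""
    problems = []
    # Blob versioning is required on both the source and the destination account.
    for account in (source, destination):
        if not account.versioning:
            problems.append(f"{account.name}: enable blob versioning")
    # Change feed is required on the source account.
    if not source.change_feed:
        problems.append(f"{source.name}: enable change feed")
    return problems


source = AccountSettings("publicwebsitestorage01", versioning=True, change_feed=False)
destination = AccountSettings("privatecompanystorage01", versioning=False, change_feed=False)
for problem in replication_problems(source, destination):
    print(problem)
```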

Task 4: Provide shared file storage for the company offices

The company is geographically dispersed with offices in different locations. These offices need a way to share files and disseminate information. For example, the Finance department needs to confirm cost information for auditing and compliance. These file shares should be easy to access and load without delay. Some content should only be accessed from selected corporate virtual networks.

Architecture diagram

Tasks required

- Create a storage account specifically for file shares, in keeping with our principle of using separate storage accounts when the required settings differ.

- Configure a file share and directory to store the data in a central repository that can be accessed by multiple users in different locations.

- Configure snapshots to capture the state of the file share at a particular point in time, and practice restoring files.

- Restrict access to a specific virtual network and subnet.

Create and configure a storage account for Azure Files.

- Create a storage account for the finance department’s shared files.

- In the portal, search for and select Storage accounts.

- Select + Create.

- For Resource group, select the resource group we’ve been using for this lab.

- Provide a Storage account name e.g. companyfilesstorage01

- Set the Performance to Premium.

- Set the Premium account type to File shares. Premium file shares are backed by solid-state drives (SSDs), which give lower latency and higher throughput than standard storage.

- Set the Redundancy to Zone-redundant storage.

- Select Review + create and then Create the storage account.

- Wait for the resource to deploy.

- Select Go to resource.

Create and configure a file share with directory.

- Create a file share for the corporate office.

- In the storage account, in the Data storage section, select the File shares blade.

- Select + File share and provide a Name e.g. companyinternalfiles.

- Review the other options, but take the defaults.

- Select Review + Create.

- Select Create.

2. Add a directory to the file share for the finance department. For future testing, upload a file.

- Select your file share and select + Add directory.

- Name the new directory finance.

- Select Browse on the left pane and then select the finance directory.

- Notice you can Add directory to further organize your file share.

- Upload a file of your choosing.

Configure and test snapshots.

- Similar to blob storage, we need to protect against accidental deletion of files. We decide to use snapshots which capture the share state at that point in time.

- Navigate to your file share Overview page.

- In the Operations section, select the Snapshots blade.

- Select + Add snapshot. The comment is optional. Select OK.

- Select your snapshot and verify your file directory and uploaded file are included.

2. Practice using snapshots to restore a file.

- Navigate to your file share Overview page.

- Browse to your file directory.

- Locate your uploaded file and in the Properties pane select Delete. Select Yes to confirm the deletion.

- Select the Snapshots blade and then select your snapshot.

- Navigate to the file you want to restore.

- Select the file and then select Restore.

- Provide a Restored file name e.g. Linux_Commands_Restored.

- Verify your file directory has the restored file.
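Conceptually, a share snapshot is a read-only copy of the whole share at one moment, and restoring copies a single file out of it back into the live share. The toy model below (a hypothetical class, not the Azure SDK) illustrates that flow, using the restored file name from this lab and a made-up original file name. Real Azure Files snapshots are incremental, storing only data changed since the previous snapshot, so the full copy here is a simplification.

```python
from datetime import datetime, timezone


class FileShare:
    """Toy model of a file share with share snapshots (hypothetical, not the Azure SDK)."""

    def __init__(self) -> None:
        self.files: dict[str, bytes] = {}  # path -> content
        self.snapshots: dict[str, dict[str, bytes]] = {}  # snapshot timestamp -> frozen copy

    def take_snapshot(self) -> str:
        # Freeze the state of the whole share at this moment (a full copy, for simplicity).
        stamp = datetime.now(timezone.utc).isoformat()
        self.snapshots[stamp] = dict(self.files)
        return stamp

    def restore(self, stamp: str, path: str, restored_path: str) -> None:
        # Copy one file out of the read-only snapshot back into the live share.
        self.files[restored_path] = self.snapshots[stamp][path]


share = FileShare()
share.files["finance/Linux_Commands.txt"] = b"ls -la"
snap = share.take_snapshot()
del share.files["finance/Linux_Commands.txt"]  # the accidental deletion
share.restore(snap, "finance/Linux_Commands.txt", "finance/Linux_Commands_Restored.txt")
print(list(share.files))  # ['finance/Linux_Commands_Restored.txt']
```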

Configure restricting storage access to selected virtual networks.

- The tasks in this section require a virtual network with a subnet. In a production environment these resources would already be created.

- Search for and select Virtual networks in the Azure search box.

- Select Create, select your resource group, and give the virtual network the name privatenet01.

- Take the defaults for other parameters, select Review + create, and then Create.

- Wait for the resource to deploy.

- Select Go to resource.

- In the Settings section, select the Subnets blade.

- Select the default subnet.

- In the Service endpoints section choose Microsoft.Storage in the Services drop-down.

- Do not make any other changes.

- Be sure to Save your changes.

2. The storage account should only be accessed from the virtual network you just created. We implement this with the Microsoft.Storage service endpoint we enabled on the subnet, together with a virtual network rule on the storage account firewall. With a service endpoint, traffic from the subnet to the storage account stays on the Microsoft backbone network instead of crossing the public internet, and the storage account can identify it as coming from that subnet, so the firewall admits it while rejecting other networks. An alternative is a private endpoint (Azure Private Link), which gives the storage account a private IP address from the VNet address space so it can be reached without using its public endpoint at all.

- Return to your companyfilesstorage01 storage account.

- In the Security + networking section, select the Networking blade.

- Change the Public network access to Enabled from selected virtual networks and IP addresses.

- In the Virtual networks section, select Add existing virtual network.

- Select your virtual network and subnet, select Add.

- Be sure to Save your changes.

- Select the Storage browser and navigate to your file share. Select Browse to view your file share.

- Verify you receive a message that you are not authorized to perform this operation. This is expected, because you are not connecting from the virtual network.
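The storage firewall settings we have used (the client IP rule in Task 3 and the virtual network rule here) can be pictured as an allow-list check. The sketch below is a simplified model, not how Azure evaluates requests internally: the dictionary mirrors the `networkAcls` property of a storage account in ARM templates (`defaultAction`, `ipRules`, `virtualNetworkRules`), the function is hypothetical, and the subscription ID and IP addresses are placeholders.

```python
import ipaddress
from typing import Optional


def request_allowed(network_acls: dict, client_ip: Optional[str] = None,
                    subnet_id: Optional[str] = None) -> bool:
    """Simplified model of storage firewall rules admitting or rejecting a request.

    client_ip is a public IP for internet traffic; subnet_id identifies traffic that
    arrives through a subnet with the Microsoft.Storage service endpoint.
    """
    if network_acls.get("defaultAction", "Allow") == "Allow":
        return True  # public network access from all networks
    if subnet_id is not None:
        allowed = {rule["id"].lower() for rule in network_acls.get("virtualNetworkRules", [])}
        if subnet_id.lower() in allowed:
            return True
    if client_ip is not None:
        address = ipaddress.ip_address(client_ip)
        for rule in network_acls.get("ipRules", []):
            # strict=False tolerates host bits in a rule such as "203.0.113.7/24".
            if address in ipaddress.ip_network(rule["value"], strict=False):
                return True
    return False


SUBNET = ("/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/Storage-RG"
          "/providers/Microsoft.Network/virtualNetworks/privatenet01/subnets/default")
acls = {"defaultAction": "Deny", "ipRules": [], "virtualNetworkRules": [{"id": SUBNET}]}
print(request_allowed(acls, subnet_id=SUBNET))           # True: traffic from privatenet01
print(request_allowed(acls, client_ip="198.51.100.20"))  # False: your browser on the internet
```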

Task 5: Provide storage for a new company app

The company is designing and developing a new app. Developers need to ensure the storage is only accessed using keys and managed identities. The developers would like to use role-based access control. To help with testing, protected immutable storage is needed.

Architecture diagram

Tasks required

- Create the storage account and managed identity.

- Secure access to the storage account with a key vault and key.

- Configure the storage account to use the customer managed key in the key vault

- Configure a time-based retention policy and an encryption scope.

Create the storage account and managed identity

- Provide a storage account for the web app.

- In the portal, search for and select Storage accounts.

- Select + Create.

- For Resource group select the Storage-RG we’ve been using.

- Provide a Storage account name, e.g. companyappstorage01.

- Move to the Encryption tab.

- Check the box for Enable infrastructure encryption. Notice the warning “This option cannot be changed after this storage account is created”.

By default, Azure encrypts storage account data at rest. Infrastructure encryption adds a second layer of encryption to your storage account’s data.

- Select Review + Create, then select Create.

- Wait for the resource to deploy.

2. Provide a managed identity for the web app to use. Managed identities eliminate the need for developers to manage the credentials, keys, certificates and secrets used to secure communication between services.

Azure Key Vault is the service used to store these secrets. It uses Microsoft Entra ID to authenticate apps and users trying to access a vault. To grant a web app access to the vault, you first need to register the app with Microsoft Entra ID, creating an identity for the app that you can then assign vault permissions to.

Apps and users authenticate to Key Vault using a Microsoft Entra authentication token. Getting a token from Microsoft Entra ID requires a secret or certificate. Anyone with a token could use the app identity to access all of the secrets in the vault. This leads to a bootstrapping problem: all your secrets are stored securely in the key vault, but you still need to keep a secret or certificate outside the vault to access them. The solution to this is managed identities.

Managed identities for Azure resources is an Azure feature your app can use to access Key Vault and other Azure services without having to manage a single secret outside of the vault. When you enable managed identity on your web app, Azure activates a separate token-granting REST service specifically for your app to use. Your app requests tokens from this service instead of directly from Microsoft Entra ID. Your app needs to use a secret to access this service, but that secret is injected into your app’s environment variables by App Service when it starts up. You don’t need to manage or store this secret value anywhere, and nothing outside of your app can access this secret or the managed identity token service endpoint.

- Search for and select Managed identities.

- Select Create.

- Select your resource group.

- Give your managed identity a name, e.g. webapp-MI.

- Select Review and create, and then Create.
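To make the token service described above less abstract, here is a sketch of the request an app running in App Service would send to it. App Service exposes the service through the IDENTITY_ENDPOINT and IDENTITY_HEADER environment variables (the header value is the injected secret), and a user-assigned identity such as webapp-MI is selected by its client ID. The function is hypothetical and only builds the request; real code would normally let the Azure Identity library (for example `ManagedIdentityCredential` in the Python `azure-identity` package) handle this. The endpoint, secret and client ID in the example are placeholders.

```python
import os
from typing import Mapping, Optional
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(resource: str, client_id: Optional[str] = None,
                        env: Mapping[str, str] = os.environ) -> Request:
    """Build (but don't send) a token request to App Service's managed identity endpoint."""
    params = {"resource": resource, "api-version": "2019-08-01"}
    if client_id:
        # Selects a specific user-assigned identity, such as webapp-MI.
        params["client_id"] = client_id
    url = f"{env['IDENTITY_ENDPOINT']}?{urlencode(params)}"
    # The header carries the secret App Service injected at startup.
    return Request(url, headers={"X-IDENTITY-HEADER": env["IDENTITY_HEADER"]})


# Placeholder values; inside App Service these come from the real environment.
fake_env = {"IDENTITY_ENDPOINT": "http://localhost:4141/msi/token",
            "IDENTITY_HEADER": "placeholder-secret"}
req = build_token_request("https://storage.azure.com/",
                          client_id="00000000-0000-0000-0000-000000000000", env=fake_env)
print(req.full_url)
```

Inside App Service, passing the request to `urllib.request.urlopen` should return a JSON body whose `access_token` field is a bearer token for Azure Storage.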

3. Assign the correct permissions to the managed identity. The identity only needs to read and list containers and blobs.

Azure role-based access control (Azure RBAC) is the authorization system you use to manage access to Azure resources. To grant access, you assign roles to users, groups, service principals, or managed identities at a particular scope. To proceed with the following steps, you need a role that includes the Microsoft.Authorization/roleAssignments/write permission, such as Role Based Access Control Administrator or User Access Administrator:

- Search for and select your storage account, companyappstorage01.

- Select the Access Control (IAM) blade.

- Select Add role assignment (center of the page).

- On the Job functions roles page, search for and select the Storage Blob Data Reader role.

- On the Members page, select Managed identity.

- Select Select members, in the Managed identity drop-down select User-assigned managed identity.

- Select the managed identity you created in the previous step.

- Click Select and then Review + assign the role.

- Select Review + assign a second time to add the role assignment.

- Your storage account can now be accessed by the managed identity with Storage Blob Data Reader permissions.
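The way this role assignment grants access can be modelled in a few lines. In Azure RBAC, a role assignment applies at its scope and to everything beneath it, so an assignment on the storage account covers every container and blob inside it. The sketch below is a simplified, hypothetical model: it lists only the blob read data action of Storage Blob Data Reader (the built-in role includes other permissions too), ignores deny assignments and conditions, and uses a placeholder subscription ID, container and blob name.

```python
from dataclasses import dataclass

# Abbreviated role definition: only the data action this example needs.
ROLE_DATA_ACTIONS = {
    "Storage Blob Data Reader": {
        "Microsoft.Storage/storageAccounts/blobServices/containers/blobs/read",
    },
}


@dataclass(frozen=True)
class RoleAssignment:
    principal: str
    role: str
    scope: str


def _within(scope: str, resource_id: str) -> bool:
    # Resource IDs are case-insensitive; a scope covers itself and all child resources.
    scope, resource_id = scope.lower().rstrip("/"), resource_id.lower()
    return resource_id == scope or resource_id.startswith(scope + "/")


def is_authorized(assignments: list[RoleAssignment], principal: str,
                  data_action: str, resource_id: str) -> bool:
    return any(
        a.principal == principal
        and _within(a.scope, resource_id)
        and data_action in ROLE_DATA_ACTIONS.get(a.role, set())
        for a in assignments
    )


ACCOUNT = ("/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/Storage-RG"
           "/providers/Microsoft.Storage/storageAccounts/companyappstorage01")
BLOB = ACCOUNT + "/blobServices/default/containers/appcontainer/blobs/report.txt"
READ = "Microsoft.Storage/storageAccounts/blobServices/containers/blobs/read"
WRITE = "Microsoft.Storage/storageAccounts/blobServices/containers/blobs/write"
assignments = [RoleAssignment("webapp-MI", "Storage Blob Data Reader", ACCOUNT)]
print(is_authorized(assignments, "webapp-MI", READ, BLOB))   # True
print(is_authorized(assignments, "webapp-MI", WRITE, BLOB))  # False
```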

Secure access to the storage account with a key vault and key

- To create the key vault and key needed for this part of the lab, your user account must have Key Vault Administrator permissions.

- In the portal, search for and select Resource groups.

- Select your resource group, Storage-RG and then the Access Control (IAM) blade.

- Select Add role assignment (center of the page).

- On the Job functions roles page, search for and select the Key Vault Administrator role.

- On the Members page, select User, group, or service principal.

- Select Select members.

- Search for and select your user account. Your user account is shown in the top right of the portal.

- Click Select and then Review + assign.

- Select Review + assign a second time to add the role assignment.

- You are now ready to continue with the lab.

2. Create a key vault to store the access keys.

- In the portal, search for and select Key vaults.

- Select Create.

- Select your resource group.

- Provide a name for the key vault, e.g. companyapp-kv. Key vault names must be globally unique.

- On the Access configuration tab, ensure Azure role-based access control (recommended) is selected. With this model, access to the vault’s keys and secrets is authorized through Microsoft Entra ID and Azure RBAC role assignments, like the ones we create in this task.

- Select Review + create.

- Wait for the validation checks to complete and then select Create.

- After the deployment, select Go to resource.

- On the Overview blade, ensure both Soft-delete and Purge protection (Enforce a mandatory retention period for deleted vaults and vault objects) are enabled. Customer-managed keys for Azure Storage require both settings on the key vault.

3. Create a customer-managed key in the key vault.

- In your key vault, in the Objects section, select the Keys blade.

- Select Generate/Import.

- Name the key companyappaccessstorage-key, take the defaults for the rest of the parameters, and Create the key.

Configure the storage account to use the customer managed key in the key vault

- Before you can complete the next steps, you must assign the Key Vault Crypto Service Encryption User role to the managed identity.

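A managed identity needs this role before the storage account can use it to reach the customer-managed key: the role lets the identity read the key’s metadata and use the key to wrap and unwrap the storage account’s encryption key. A system-assigned managed identity is tied to a single Azure resource, such as this storage account, and must be enabled on it explicitly. In this lab we instead use the user-assigned managed identity webapp-MI created earlier, which we associate with the storage account when we configure encryption in the next step.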

- In the portal, search for and select Resource groups.

- Select your resource group, and then the Access Control (IAM) blade.

- Select Add role assignment (center of the page).

- On the Job functions roles page, search for and select the Key Vault Crypto Service Encryption User role.

- On the Members page, select Managed identity.

- Select Select members, in the Managed identity drop-down select User-assigned managed identity.

- Select your managed identity, then click Select.

- Click Review + assign.

- Select Review + assign a second time to add the role assignment.

2. Configure the storage account to use the customer managed key in your key vault.

- Return to the storage account, companyappstorage01.

- In the Security + networking section, select the Encryption blade.

- Select Customer-managed keys.

- Scroll down to Key Selection section and click on Select a key vault and key.

- Set the Key store type to Key vault, then select your key vault and key.

- Select to confirm your choices.

- Ensure the Identity type is set to User-assigned.

- Click on Select an identity.

- Select your managed identity then select Add.

- Save your changes.

- If you receive an error that your identity does not have the correct permissions, wait a minute and try again.

Take note of a summary of what we have accomplished so far in Task 5:

- As Key Vault Administrator, your Azure account (managed by Microsoft Entra ID) has full operational access to the key vault and the keys stored in it. This is what allowed you to create the key companyappaccessstorage-key.

- The storage account uses the managed identity webapp-MI, which holds the Key Vault Crypto Service Encryption User role, to access companyappaccessstorage-key and use it to wrap and unwrap the encryption key that protects the data in companyappstorage01. The app itself never handles this key.

- The web app is meant to read the data in companyappstorage01 using the same managed identity, webapp-MI, which holds the Storage Blob Data Reader role on the storage account.

- Take note that a single managed identity can be assigned multiple roles through Azure RBAC, each granting access to a different resource.

Configure a time-based retention policy and an encryption scope.

- The developers require a storage container where files can’t be modified, even by the administrator. Immutable storage for Azure Blob Storage enables users to store business-critical data in a WORM (Write Once, Read Many) state. While in a WORM state, data can’t be modified or deleted for a user-specified interval. By configuring immutability policies for blob data, you can protect your data from overwrites and deletes.

Immutable storage for Azure Blob Storage supports two types of immutability policies, which can be applied at the same time:

a) Time-based retention policies: With a time-based retention policy, users can set policies to store data for a specified interval. When a time-based retention policy is set, objects can be created and read, but not modified or deleted. After the retention period has expired, objects can be deleted but not overwritten.

b) Legal hold policies: A legal hold stores immutable data until the legal hold is explicitly cleared. When a legal hold is set, objects can be created and read, but not modified or deleted.

- Navigate to your storage account.

- In the Data storage section, select the Containers blade.

- Create a container called hold. Take the defaults.

- Upload a file to the container.

- In the Settings section, select the Access policy blade.

- In the Immutable blob storage section, select + Add policy.

- For the Policy type, select time-based retention.

- Set the Retention period to 5 days.

- Be sure to Save your changes.

- Try to delete the file in the container.

- Verify you are notified that the blob failed to delete because of the policy.
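The retention rule we just tested boils down to a date comparison. The hypothetical helper below models it for a container-level time-based retention policy, where a blob stays protected for the retention interval counted from its creation time, plus an optional legal hold. It ignores the difference between unlocked and locked policies: a policy left unlocked, as in this lab, can still be shortened or deleted by an administrator, while a locked policy can’t be deleted and its interval can only be extended.

```python
from datetime import datetime, timedelta


def can_delete(created: datetime, retention_days: int, now: datetime,
               legal_hold: bool = False) -> bool:
    """Simplified WORM check: may this blob be deleted at time `now`?"""
    if legal_hold:
        # A legal hold blocks deletion until it is explicitly cleared.
        return False
    # Under time-based retention, deletion is blocked until the interval has elapsed.
    return now >= created + timedelta(days=retention_days)


uploaded = datetime(2024, 5, 1, 9, 0)
print(can_delete(uploaded, 5, now=datetime(2024, 5, 3)))        # False: still protected
print(can_delete(uploaded, 5, now=datetime(2024, 5, 6, 9, 0)))  # True: 5 days have passed
```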

2. The developers require an encryption scope that enables infrastructure encryption.

Azure Storage automatically encrypts all data in a storage account at the service level using 256-bit AES encryption in GCM mode. AES is one of the strongest block ciphers available, and this encryption is FIPS 140-2 compliant. Customers who require higher levels of assurance that their data is secure can also enable 256-bit AES encryption in CBC mode at the Azure Storage infrastructure level for double encryption. Double encryption of Azure Storage data protects against a scenario where one of the encryption algorithms or keys is compromised: the additional layer of encryption continues to protect your data.

- Navigate back to your storage account.

- In the Security + networking section, select the Encryption blade.

- In the Encryption scopes tab, select Add.

- Give your encryption scope a name, e.g. doubleencryptstore.

- The Encryption type is Microsoft-managed key.

- Notice that the Infrastructure encryption option is set to Enabled, because we enabled infrastructure encryption when we created this storage account.

- Create the encryption scope.

- Return to your storage account and create a new container.

- Notice that in the Advanced section you can select the Encryption scope you created and apply it to all blobs in the container.

- Click on create.

Lab cleanup

To clean up the subscription, delete resource group Storage-RG.